DeepSeek: Apple Researchers Explain Why It Works So Much More Efficiently and Cost-Effectively

DeepSeek's efficiency comes largely from a broad deep learning technique that squeezes more out of computer processors by exploiting a phenomenon known as sparsity.

The world of artificial intelligence, and the stock market along with it, was shaken on Monday by the sudden attention surrounding DeepSeek, the open-source large language model developed by a China-based hedge fund. DeepSeek outperformed OpenAI's best models on certain tasks while costing significantly less. The success of DeepSeek R1 points to a major shift in AI: smaller labs and researchers may now be able to build competitive models and broaden the range of available options.

The Power of Sparsity

Sparsity takes many forms. Sometimes it means discarding portions of the data an AI model uses when that data has little effect on the model's output. Other times it means switching off entire sections of a neural network when doing so does not change the final result. DeepSeek is an example of the latter: economical use of a neural network.

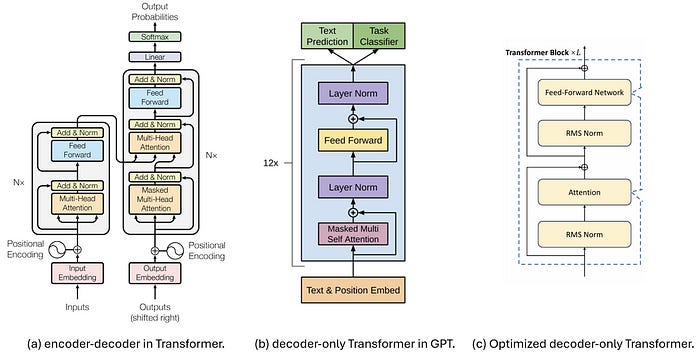

The main advance that many observers have noted in DeepSeek is its ability to switch large sections of the neural network's "weights," or "parameters," on and off. These parameters determine how a neural network transforms its input, such as the text prompt you type, into generated text or images. The number of parameters in use directly affects computation time: more active parameters typically means more computation.

The ability to use only a subset of a large language model’s total parameters while deactivating the rest is an example of sparsity. This can have a significant impact on an AI model’s computational budget. Apple’s AI researchers, in a report published last week, provided a clear explanation of how DeepSeek and similar approaches use sparsity to achieve better results with a given amount of computational power.
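To make "using only a subset of the parameters" concrete, here is a minimal sketch of top-k routing in a mixture-of-experts (MoE) layer, the kind of model the Apple paper studies. It assumes toy scalar "experts" and a generic softmax router; the names `top_k_route` and `moe_forward` are hypothetical, and DeepSeek's actual gating differs in its details. The point is only that a router scores every expert, but just the top `k` are ever run for a given input.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits, k):
    """Pick the k experts with the highest router scores.

    Returns (expert_index, gate_weight) pairs. The gate weights are a softmax
    over the chosen experts only; every other expert gets no weight and is
    never evaluated for this input.
    """
    ranked = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    gates = softmax([router_logits[i] for i in chosen])
    return list(zip(chosen, gates))


def moe_forward(x, experts, router_logits, k):
    """Weighted sum of the outputs of the k selected experts.

    Returns the mixed output and how many experts were actually run.
    """
    out = 0.0
    calls = 0
    for idx, gate in top_k_route(router_logits, k):
        out += gate * experts[idx](x)
        calls += 1
    return out, calls


# Eight toy "experts" (hypothetical): expert i simply scales its input by i + 1.
experts = [lambda x, s=s: s * x for s in range(1, 9)]
router_logits = [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, -0.5, 1.0]
y, calls = moe_forward(1.0, experts, router_logits, k=2)
print(calls)  # 2: only 2 of the 8 experts did any work
```

With 8 experts and k = 2, three quarters of the expert parameters sit idle for this input. In a real MoE model the router picks a different subset for each token, so every expert gets used across a batch even though each token touches only a few.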

Apple has no connection to DeepSeek, but it regularly conducts AI research of its own, so developments at outside companies such as DeepSeek fall within its broader engagement with the field.

Apple's Research on Sparsity

In their paper "Parameters vs FLOPs: Scaling Laws for Optimal Sparsity for Mixture-of-Experts Language Models," posted on the arXiv preprint server, lead author Samira Abnar and other Apple researchers, together with MIT collaborator Harshay Shah, studied how performance changes as sparsity is exploited by turning off parts of a neural network.

Abnar and colleagues ran their experiments using MegaBlocks, a code library released in 2023 by AI researchers from Microsoft, Google, and Stanford. They make clear, however, that their findings apply to DeepSeek and other recent innovations.

The researchers posed a key question:

Is there an "optimal" level of sparsity in DeepSeek and similar models? That is, for a given amount of computational power, is there an ideal number of neural weights that should be activated or deactivated?

Sparsity can be quantified as the percentage of all neural weights that are switched off. This percentage can approach, but never reach, 100%, because a network with every weight inactive would compute nothing at all.
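The percentage described above is simple to compute. The sketch below uses hypothetical helpers, not code from the paper: it counts sparsity either over raw parameters (1 - active/total) or, as is common for MoE models, over experts ((E - k)/E). The example plugs in DeepSeek-V3's publicly reported figures of roughly 671 billion total and 37 billion activated parameters per token.

```python
def sparsity(active_params: float, total_params: float) -> float:
    """Fraction of parameters left inactive: 1 - active/total.

    Requiring at least one active parameter is what keeps the result
    strictly below 1.0 (i.e. below 100%).
    """
    if not 0 < active_params <= total_params:
        raise ValueError("need 0 < active_params <= total_params")
    return 1.0 - active_params / total_params


def expert_sparsity(active_experts: int, total_experts: int) -> float:
    """The same idea counted in experts rather than raw weights: (E - k) / E."""
    if not 0 < active_experts <= total_experts:
        raise ValueError("need 0 < active_experts <= total_experts")
    return (total_experts - active_experts) / total_experts


# Publicly reported DeepSeek-V3 figures: ~671B total, ~37B active per token.
print(f"{sparsity(37e9, 671e9):.1%}")  # 94.5%
# Hypothetical MoE layer with 8 experts, 2 active per token.
print(expert_sparsity(2, 8))  # 0.75
```

By either measure, a dense model has sparsity 0, and the number only climbs toward 100% as a smaller share of the network is used per token.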

Efficiency Gains Through Sparsity

One of the most significant findings is that, as computing resources increase, a neural network of a given size needs fewer active parameters to reach the same or better accuracy on an AI benchmark task (such as solving math problems or answering questions).

In simpler terms, regardless of the computing power available, more portions of the neural network can be turned off while still delivering the same or even better results. REF1

As Abnar and colleagues put it in technical terms:

"Increasing sparsity while proportionally expanding the total number of parameters consistently leads to lower pre-training loss, even when constrained by a fixed compute budget."

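In AI research, "pre-training loss" measures how far a network's predictions are from the training data during pre-training; for language models it is typically the error in predicting the next token of text. A lower pre-training loss generally goes hand in hand with higher accuracy.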
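For language models, the pre-training loss is usually the average cross-entropy of next-token prediction: the negative log of the probability the model assigned to the token that actually came next. Here is a minimal sketch, assuming the model's output is already a probability distribution for each position (real training computes it from logits over vocabularies of tens of thousands of tokens, in large batches); `next_token_loss` is a hypothetical name.

```python
import math


def next_token_loss(probs_per_step, targets):
    """Average negative log-likelihood of the correct next token.

    probs_per_step[t] is the model's probability distribution over the
    vocabulary at step t; targets[t] is the index of the token that
    actually came next.
    """
    if len(probs_per_step) != len(targets) or not targets:
        raise ValueError("need one distribution per target, and at least one target")
    nll = [-math.log(dist[tok]) for dist, tok in zip(probs_per_step, targets)]
    return sum(nll) / len(nll)


# Toy 3-token vocabulary; the correct next tokens are 0 and then 2.
confident = [[0.9, 0.05, 0.05], [0.1, 0.1, 0.8]]
unsure = [[0.4, 0.3, 0.3], [0.3, 0.4, 0.3]]
targets = [0, 2]
print(next_token_loss(confident, targets) < next_token_loss(unsure, targets))  # True
```

A model that puts more probability on the right tokens gets a lower loss, which is why lower pre-training loss tends to track better downstream accuracy.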

This finding explains how DeepSeek can use less computing power yet achieve the same or even better results: it simply switches off more of the network. Sparsity acts as a kind of "magic dial" that finds the best match between the AI model and the available computing resources. The principle follows the same economic rule that has held for each new generation of personal computers:

Better performance for the same cost

The same performance for a lower cost
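The bookkeeping behind the Apple team's phrase "increasing sparsity while proportionally expanding the total number of parameters" can be sketched with a few lines of arithmetic. This sketch assumes the widely used rule of thumb that training compute is roughly 6 × (active parameters) × (training tokens); the budget and token count are hypothetical, and the helper names are illustrative only.

```python
def active_params_for_budget(train_flops: float, train_tokens: float) -> float:
    """Active parameters affordable under the rule of thumb C ~= 6 * N_active * D."""
    return train_flops / (6.0 * train_tokens)


def total_params_at_sparsity(active: float, sparsity: float) -> float:
    """Total parameters such that only (1 - sparsity) of them are active per token."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    return active / (1.0 - sparsity)


# Hypothetical budget: 6e21 training FLOPs spread over 1e11 tokens.
active = active_params_for_budget(6e21, 1e11)  # 1e10, i.e. 10B active parameters

for s in (0.0, 0.5, 0.75, 0.9):
    total = total_params_at_sparsity(active, s)
    # Per-token compute stays fixed; only the total parameter count grows.
    print(f"sparsity {s:.0%}: {total / 1e9:.0f}B total, {active / 1e9:.0f}B active")
```

The sketch ignores attention and routing overhead and shows only the accounting: at 90% sparsity the same budget pays for a model with 100B total parameters. Whether that larger, sparser model actually reaches a lower loss is the empirical question the Apple paper measured.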

Other Innovations in DeepSeek

Beyond sparsity, DeepSeek introduces other innovations. For example, as Ege Erdil of Epoch AI has explained, DeepSeek uses a mathematical technique called "multi-head latent attention." This method compresses one of the largest consumers of memory and bandwidth: the key-value (KV) cache, which holds the attention keys and values already computed for the text of a prompt. REF2
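During text generation, a transformer caches a key vector and a value vector for every previous token, in every attention head of every layer, so it does not have to recompute them. Multi-head latent attention instead caches one compressed "latent" vector per token, from which keys and values are reconstructed. The back-of-the-envelope comparison below assumes illustrative dimensions loosely modeled on DeepSeek's published V2/V3 configurations (128 heads of size 128, a 512-dimensional latent plus a 64-dimensional positional key, 60 layers, 16-bit storage); treat them as assumptions, not exact figures.

```python
def mha_cache_elems_per_token(n_heads: int, head_dim: int) -> int:
    """Standard multi-head attention caches one key and one value vector per head."""
    return 2 * n_heads * head_dim


def latent_cache_elems_per_token(latent_dim: int, rope_key_dim: int) -> int:
    """MLA-style caching: one compressed latent vector shared by all heads,
    plus a small positional (RoPE) key component."""
    return latent_dim + rope_key_dim


def cache_bytes(elems_per_token: int, tokens: int, layers: int, bytes_per_elem: int = 2) -> int:
    """Total cache size for one sequence; 2 bytes per element assumes 16-bit storage."""
    return elems_per_token * tokens * layers * bytes_per_elem


# Illustrative settings (assumptions), loosely modeled on DeepSeek-V2/V3.
N_HEADS, HEAD_DIM, LATENT_DIM, ROPE_DIM, LAYERS = 128, 128, 512, 64, 60
mha = mha_cache_elems_per_token(N_HEADS, HEAD_DIM)
mla = latent_cache_elems_per_token(LATENT_DIM, ROPE_DIM)
print(mha, mla, round(mha / mla, 1))  # 32768 576 56.9

SEQ_LEN = 32_768
for name, elems in (("standard MHA", mha), ("latent (MLA-style)", mla)):
    gib = cache_bytes(elems, SEQ_LEN, LAYERS) / 2**30
    print(f"{name}: {gib:.1f} GiB for a {SEQ_LEN}-token context")
```

Under these assumptions the cache shrinks from 120.0 GiB to about 2.1 GiB per sequence. The saving is not free: the model needs extra matrix multiplications to expand the latent back into per-head keys and values, trading some computation for a much smaller cache.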

The Bigger Picture

Sparsity itself is not a new idea in AI research. Researchers have long shown that removing parts of a neural network can yield comparable or even better accuracy with less computation.
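One classic, simple form of this weight removal is magnitude pruning: zero out the weights with the smallest absolute values, on the theory that they contribute least to the output. The sketch below illustrates that older idea with a hypothetical `magnitude_prune` helper. It is not what DeepSeek does; a mixture-of-experts model keeps all its weights and instead chooses which ones to use for each token.

```python
def magnitude_prune(weights, fraction):
    """Zero out the smallest-magnitude `fraction` of weights (unstructured pruning).

    Returns a new list. Python's sort is stable, so among weights of equal
    magnitude the earlier ones are pruned first, and exactly
    round(fraction * len(weights)) weights are removed.
    """
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be in [0, 1]")
    n_prune = round(fraction * len(weights))
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:n_prune])
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]


def dot(ws, xs):
    """Output of a single linear neuron: sum of weight * input."""
    return sum(w * x for w, x in zip(ws, xs))


w = [0.8, -0.01, 0.02, -1.2, 0.005, 0.6]
x = [1.0] * len(w)
w_half = magnitude_prune(w, 0.5)
print(w_half)  # [0.8, 0.0, 0.0, -1.2, 0.0, 0.6]
print(dot(w, x), dot(w_half, x))
```

Here half the weights are removed, yet the neuron's output barely changes (about 0.215 versus 0.2) because only near-zero weights were dropped. In practice, pruned networks are commonly fine-tuned afterwards to recover any lost accuracy.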

Intel, a longtime Nvidia competitor, has identified sparsity as a key research avenue for advancing the state of the art. Meanwhile, startups built on sparsity-based approaches have scored highly on industry benchmarks in recent years.

What makes sparsity so powerful is that it not only improves efficiency with a limited budget (as seen in DeepSeek) but also scales in the opposite direction:

Spending more on computing power leads to even greater benefits from sparsity.

As computing resources increase, AI model accuracy improves further.

Abnar and colleagues found that as sparsity increases, validation loss decreases across all computational budgets, with larger budgets achieving lower losses at every level of sparsity.

The Future of AI Models

In theory, this means that AI models can become larger and more powerful while maintaining efficiency, leading to better performance for the money invested. DeepSeek is just one example of a growing research field that many labs are already pursuing. Following DeepSeek’s success, many more will likely join the race to replicate and refine these advancements.

References

REF1 | Sparsity of weighted networks: Measures and applications

REF2 | DeepSeek-V3 Explained 1: Multi-head Latent Attention

About the author

Stavros Demirtzoglou (Σταύρος Δεμιρτζόγλου) is an experienced Solutions Architect and tech founder with over 20 years of expertise in software architecture, cross-functional team leadership, and digital product development. He is passionate about turning ideas into scalable solutions and driving innovation from concept to execution.

His early experience launching and scaling a technology venture in Greece provided valuable hands-on insight into managing full product lifecycles, navigating team dynamics, and aligning technical decisions with business priorities, a perspective that informs every product leadership role he takes on.